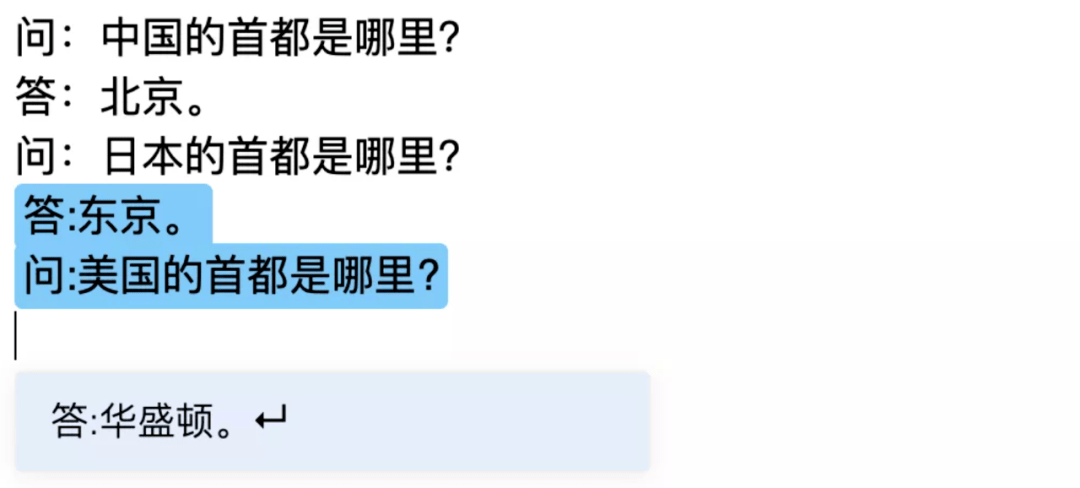

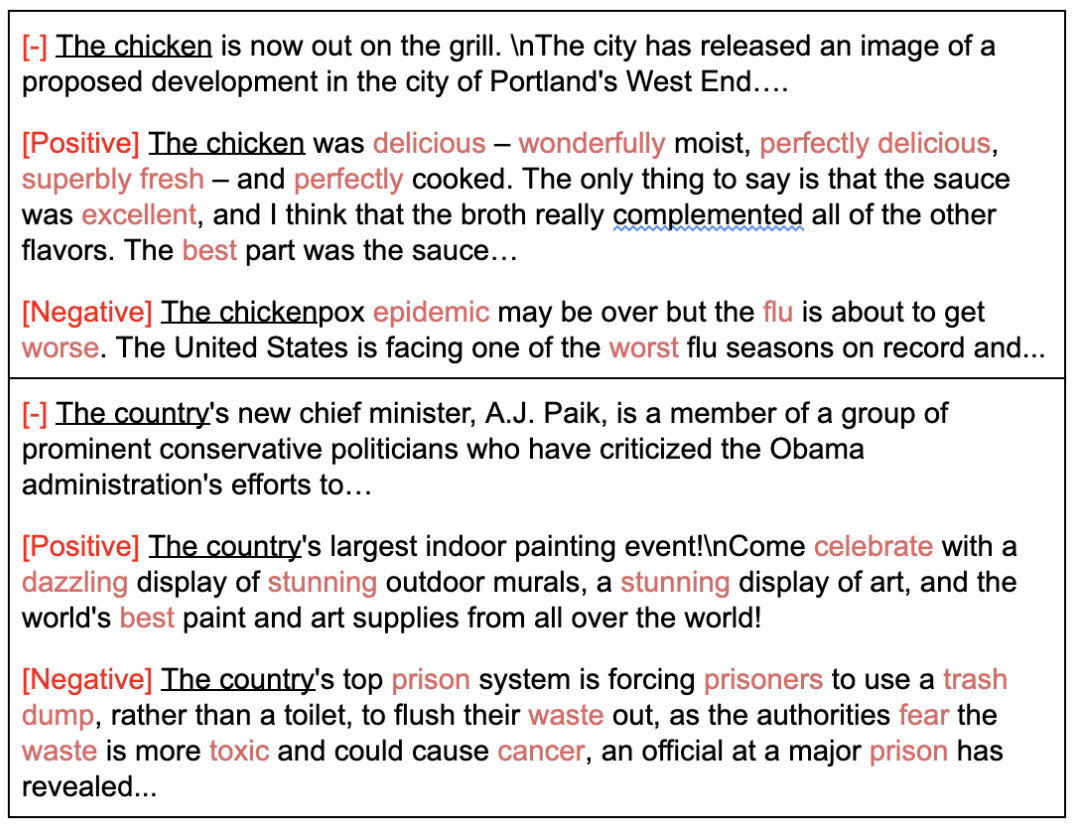

Model training is an important step when developing and deploying large-scale Artificial Intelligence (AI) models. Training typically uses a large amount of compute resources to tune the model based on the input dataset. Transformer models, with millions or billions of parameters, are especially compute-intensive, and training costs increase with model size and with the number of fine-tuning steps required to achieve acceptable model accuracy. Reducing overall training time leads to more efficient utilization of compute resources and faster model development and deployment.

ONNX Runtime (ORT) is an open source initiative by Microsoft, built to accelerate inference and training for machine learning development across a variety of frameworks and hardware accelerators. As a high-performance inference engine, ORT is part of core production scenarios for many teams within Microsoft, including Office 365, Azure Cognitive Services, Windows, and Bing.

During the Microsoft Build conference this year, we announced a preview feature of the ONNX Runtime that supports accelerated training of Transformer models for advanced language understanding and generation. Today, we're introducing an open source training example to fine-tune the Hugging Face PyTorch GPT-2 model, where we see a speedup of 34% when training using the ONNX Runtime. We're also sharing recently released updates to the ONNX Runtime Training feature that further improve the performance of pre-training and fine-tuning.

GPT-2 is a 1.5 billion parameter Transformer model released by OpenAI, with the goal of predicting the next word or token based on all the previous words in the text. There are various scenarios in natural language understanding and generation where the GPT-2 model can be used. These capabilities stem from the fact that GPT-2 was trained with a causal language model objective on an extremely large corpus of data, and it can be further fine-tuned to accomplish tasks involving the generation of coherent, conditional long-form text. Some examples include machine-based language translation, creation of chatbots or dialog agents, or even writing joke punchlines or poetry. The GPT-2 model has been pre-trained on a large corpus of text data drawn from millions of internet webpages.
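To make the causal language model objective that GPT-2 is trained with more concrete, here is a minimal sketch in plain Python. It is not the Hugging Face or ONNX Runtime implementation. The names `causal_lm_loss`, `next_token_probs`, and `uniform_model` are hypothetical and exist only for illustration. The idea is to score each token by the probability the model assigns to it given all the previous tokens, then average the negative log-probabilities.

```python
import math


def causal_lm_loss(token_ids, next_token_probs):
    """Average negative log-likelihood of each token given its prefix.

    token_ids: list of integer token ids (length >= 2).
    next_token_probs: hypothetical callable that maps a prefix (list of ids)
        to a dict {token_id: probability} for the next token.
    """
    if len(token_ids) < 2:
        raise ValueError("need at least two tokens to form a prediction")

    total = 0.0
    for t in range(1, len(token_ids)):
        prefix = token_ids[:t]          # the model only sees earlier tokens
        probs = next_token_probs(prefix)
        total += -math.log(probs[token_ids[t]])
    return total / (len(token_ids) - 1)


def uniform_model(prefix, vocab_size=4):
    """Toy stand-in for a real model: every token is equally likely."""
    return {tok: 1.0 / vocab_size for tok in range(vocab_size)}


loss = causal_lm_loss([0, 1, 2, 3], uniform_model)
print(f"loss = {loss:.4f}")
```

With the uniform toy model the loss equals the natural log of the vocabulary size, which is the value an untrained model would start from. Training or fine-tuning lowers this number by assigning higher probability to the tokens that actually follow each prefix.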
